“Most histories of medicine are strikingly odd,” notes the British physician and author Druin Burch. “They provide a clear account of what people believed they were doing, but almost none at all of whether they were right.”

Did the ostrich egg poultices applied by ancient Egyptian physicians actually heal head fractures? In ancient Mesopotamia, did the treatments of the Keeper of the Royal Rectum actually keep royal rectums healthy? And what about bloodletting? Everyone from the ancient Greeks to George Washington’s doctors swore that it was wonderfully restorative—but did it work? The standard histories are usually mute on that score.

Until very recently (in historical terms), it was not unusual for a sick person to be better off without an attending physician—because letting an illness take its natural course was less dangerous than anything a doctor might inflict. When Washington fell ill in 1799, his esteemed physicians bled him relentlessly, dosed him with mercury to cause diarrhea, induced vomiting, and raised blood-filled blisters by applying crushed beetles to the old man’s skin. A physician in Aristotle’s Athens, or Nero’s Rome, or medieval Paris, or Elizabethan London would have nodded at much of that hideous regimen.

Washington died—though, to be fair, this fact alone proves nothing about the treatments beyond that they failed to prevent his death. It’s possible that the ministrations helped, just not enough to overcome the disease that took Washington’s life; or that they didn’t help at all; or even that they hastened death. But if the physicians were already inclined to think their methods worked—which they were, or else they wouldn’t have used them—all that ambiguity was likely to be read in favour of the happy conclusion that they really were effective. It takes strong evidence, and experimentation more rigorous than the “bleed the patient and see if he gets better” variety, to overwhelm preconceptions. Before the modern age of scientific inquiry, alas, that was never done.

Consider Galen, the second-century physician to Roman emperors. No one has influenced more generations of doctors. His writings were the indisputable source of medical authority for more than 1,000 years. “It is I, and I alone, who have revealed the true path of medicine,” he wrote with his usual air of modesty.

And yet Galen never conducted anything resembling a modern experiment. Why should he have? Experiments are what people do when they aren’t sure of the truth. And Galen was untroubled by doubt. Each outcome confirmed that he was right, no matter how equivocal the evidence might have appeared to someone less sophisticated. “All who drink of this treatment recover in a short time, except those whom it does not help, who all die,” he wrote. “It is obvious, therefore, that it fails only in incurable cases.”

Galen is an extreme example. But he is the sort of figure who pops up repeatedly in the history of medicine. They are men (always men) of strong conviction and a profound trust in their own judgment. They embrace treatments, develop bold theories for why those treatments work, denounce rivals as quacks and charlatans, and spread their insights with evangelical passion. So it went from the ancient Greeks to Galen to Paracelsus to the American Benjamin Rush and the German Samuel Hahnemann.

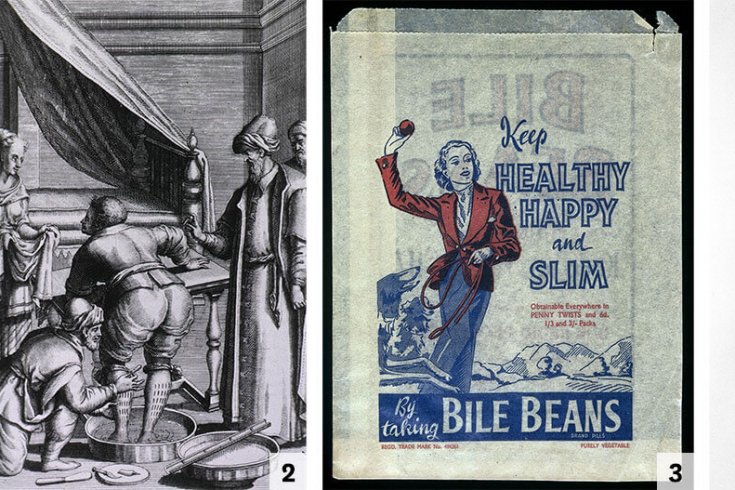

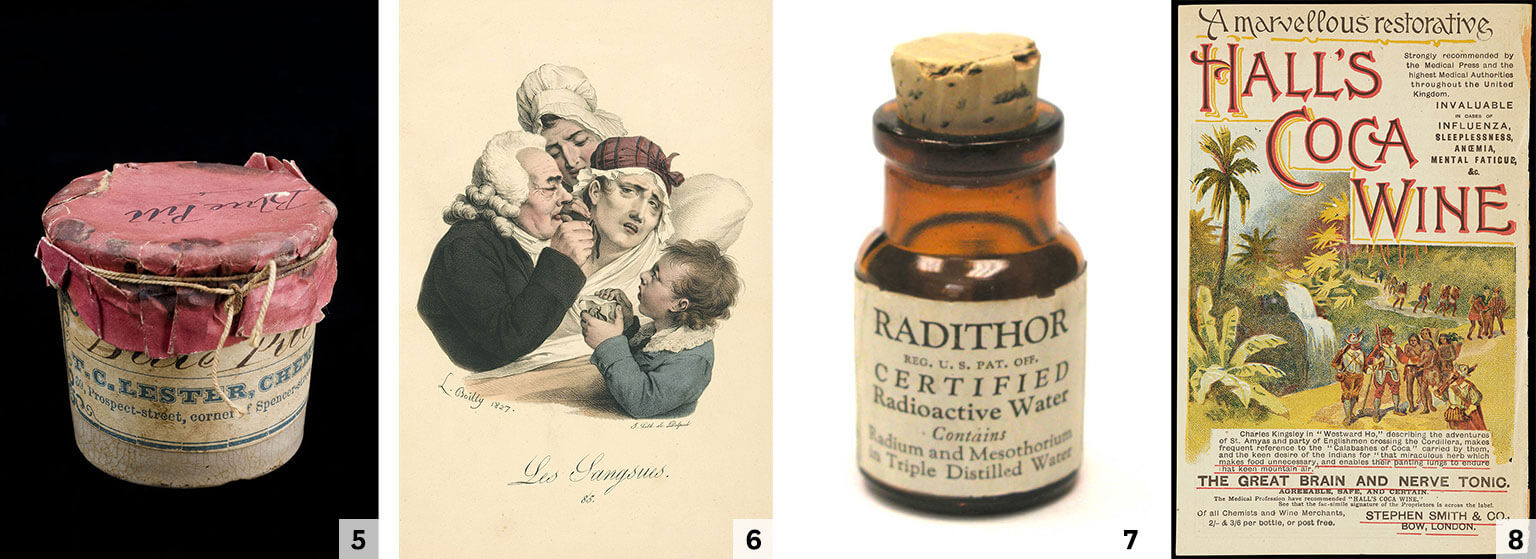

5 Blue pills with mercury, used to treat syphilis. 6 A doctor applying leeches to a Frenchwoman’s neck. 7 Early twentieth-century manufacturers guaranteed Radithor was “harmless in every respect.” 8 Coca wine was an all-purpose cure.

In the nineteenth century, American medicine saw pitched battles between orthodox physicians and a host of charismatic figures with curious new theories such as Thomsonianism, which posited that most illness was due to an excess of cold in the body, or the orificial surgery of Edwin Hartley Pratt, whose fundamental insight was that, as one detractor put it with only modest exaggeration, “the rectum is the focus of existence, contains the essence of life, and performs the functions ordinarily ascribed to the heart and brain.”

Fringe or mainstream, almost all of it was wrong. Some physicians feared as much, but most carried on with business as usual. As the surgeon and historian Ira M. Rutkow wrote, physicians who furiously debated the merits of various treatments and theories were “like blind men arguing over the colors of the rainbow.”

In 1747, a British ship’s doctor named James Lind took twelve sailors suffering from scurvy, divided them into pairs, and gave each pair a different treatment: vinegar, cider, sulphuric acid, seawater, a bark paste, and citrus fruit. It was an experiment born of desperation. Scurvy was a mortal threat to sailors on long-distance voyages, and not even the confidence of physicians could hide the futility of their treatments. So Lind took six shots in the dark—and one hit. The two sailors given the citrus recovered quickly.

But contrary to popular belief, this was not a eureka moment that ushered in the modern era of experimentation. “Lind was behaving in what sounds a modern way, but he had no real understanding of what he was doing,” notes Burch. “He failed so completely to make sense of his own experiment that even he was left unconvinced of the exceptional benefits of lemons and limes.” For years thereafter, sailors kept getting scurvy, and doctors kept prescribing worthless medicine.

Not until the twentieth century did the idea of randomized trials, careful measurement, and statistical power take hold. “Is the application of the numerical method to the subject matter of medicine a trivial and time-wasting ingenuity as some hold, or is it an important stage in the development of our art, as others proclaim?” The Lancet asked in 1921. The British statistician Austin Bradford Hill responded emphatically that it was the latter, and laid out a template for modern medical investigation. If patients who were identical in every way were put into two groups, and the groups were treated differently, he wrote, we would know whether the treatment caused any difference in outcome.

It seems simple. But it is impossible in practice, because no two people are exactly alike (not even identical twins), so the experiment will always be confounded by the differences among test subjects.

The solution lay in statistics: randomly assigning people to one group or the other would mean that whatever differences existed among them would roughly balance out if the sample set were sufficiently large.

This is all stunningly obvious today. Randomized controlled trials are now routine. Yet it was revolutionary then, because medicine had never before been scientific. True, it had occasionally reaped the benefits of science—such as the germ theory of disease and the X-ray. And it often dressed up as a science: there were educated men with impressive titles who conducted case studies and reported results in Latin-laden lectures at august universities. But it wasn’t actual science.

The critical ingredient medicine lacked was doubt. “Doubt is not a fearful thing,” physicist Richard Feynman famously observed, “but a thing of very great value.”

It is only when we have doubt in an existing hypothesis that we try looking in new directions for new ideas, and to devise objective means for comparing the worth of these new ideas. It was the absence of doubt that made medicine unscientific, and that caused it to stagnate for so long.

Unfortunately, the idea of randomized controlled trials was slow to catch on. Even after World War II, the physicians and scientists who were promoting the modernization of medicine routinely found that the establishment wasn’t interested, or even that it was hostile to their efforts. “Too much that was being done in the name of health care lacked scientific validation,” the epidemiologist Archie Cochrane complained of the 1950s and ’60s. The National Health Service—Britain’s health care system—had “far too little interest in proving and promoting what was effective.”

Physicians and the institutions they controlled didn’t need scientific validation. They just knew. Cochrane despised this attitude. He called it the god complex.

When hospitals created coronary care units to treat patients recovering from heart attacks, Cochrane proposed a randomized trial to determine whether the new units delivered better results than the old treatment, which was to send the patient home for monitoring and bed rest. Physicians balked. It was obvious that the coronary care units were superior, they said, and denying patients the best care would be unethical. But Cochrane was not a man to back down. As a prison-camp physician treating fellow POWs in World War II, he often stood up to the authorities. On occasion, he loudly berated trigger-happy German guards.

So Cochrane got his trial: some patients, randomly selected, were sent to the coronary care units, while others were sent home to convalesce. Partway through the trial, Cochrane met with a group of the cardiologists who had tried to stop his experiment. He told them that he had preliminary results. The difference in outcomes between the two treatments was not statistically significant, he emphasized, but it appeared that patients might do slightly better in the coronary care units.

The cardiologists “were vociferous in their abuse,” Cochrane later reported. “They said, ‘We always thought you were unethical. You must stop the trial at once.’ ” But then Cochrane revealed that he had played a little trick. The real results were the opposite of what he had said: home care had actually done slightly better than the coronary units. “There was dead silence.”

Unusually high heart-disease rates among prisoners drew Cochrane’s attention to the justice system, where he encountered the same attitudes among prison wardens, judges, and Home Office officials. Cochrane cited the Thatcher government’s “short, sharp shock” approach to young offenders, which called for brief incarceration in spartan jails governed by strict rules. Did it work? The government had implemented it throughout the justice system, making that question impossible to answer with any sort of systematic analysis.

If the policy had been introduced and crime had gone down, that might have meant that the policy worked—or perhaps crime had gone down for any of 100 other reasons. If crime had gone up, that might have shown the policy to be useless or even harmful—or it might have meant that crime would have risen even more but for the policy’s beneficial effects. Naturally, those in power would say the policy worked, and their opponents would say it failed. But nobody would really know. Once again, it was blind men arguing over the colours of the rainbow.

If the government had subjected its policy “to a randomized controlled trial then we might, by now, have known its true worth and be some way ahead in our thinking,” Cochrane observed. But it hadn’t. This was the same toxic brew of ignorance and confidence that had kept medicine in the dark ages for millennia.

Excerpted from Superforecasting: The Art and Science of Prediction. Published by Signal/McClelland & Stewart, a division of Penguin Random House Canada. Copyright 2015 by Philip Tetlock Consulting Inc. and Connaught Street Inc. All rights reserved.

This appeared in the December 2015 issue.